For the new semester, the University of Mainz's AI chat is getting an update with new features and an improved user interface.

Clearer Interface & New Icon

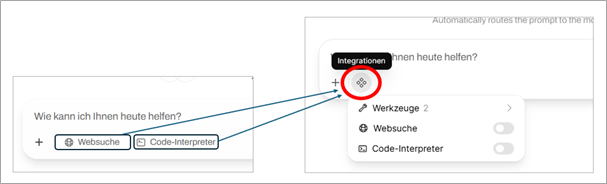

In the chat window, you will find a new icon to the right of the plus sign (+): the hash symbol (#).

Hovering over the icon with the mouse shows the label “Integrations”. Clicking the icon opens a menu with three integrations:

- Tools

- Web search

- Code interpreter

Web search and code interpreter were previously located below the chat window.

New Tools in AI Chat

You can access the tools via the new hash symbol. You must reactivate the tools for each new chat.

1. Automatic Web Search Function

- The automatic web search is available to the model as a tool, which it uses as needed, multiple times if necessary. This can be useful, for example, for complex questions and multi-step solutions.

- Unlike simple web search, the model itself decides whether or not to use the web search tool.
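The difference from the simple web search can be illustrated with a minimal sketch of such a tool loop. Everything in it is hypothetical and not taken from the AI chat itself: `web_search` is a stand-in for a real search backend, `toy_decide` is a hand-written rule standing in for the model's decision, and `max_calls` is an assumed limit on the number of searches.

```python
from typing import Callable, List, Optional


def web_search(query: str) -> str:
    """Hypothetical stand-in for a real search backend."""
    return f"results for {query!r}"


def run_with_tools(
    question: str,
    decide: Callable[[str, List[str]], Optional[str]],
    max_calls: int = 3,
) -> List[str]:
    """Let `decide` request searches until it returns None or the budget is spent."""
    findings: List[str] = []
    for _ in range(max_calls):
        query = decide(question, findings)
        if query is None:  # the "model" judged that no (further) search is needed
            break
        findings.append(web_search(query))
    return findings


def toy_decide(question: str, findings: List[str]) -> Optional[str]:
    """Toy policy standing in for the model (an assumption for illustration)."""
    if "current" not in question:
        return None  # answerable without the web
    parts = question.split(" and ")
    # Search once per part of the question, then stop.
    return parts[len(findings)] if len(findings) < len(parts) else None
```

With this toy policy, `run_with_tools("What is 2+2?", toy_decide)` performs no search at all, while a two-part question such as `"current weather and current news"` triggers two searches in a row.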

2. Automatic Web Extraction Function

- Automatic web extraction is also available to the model as a tool that it can use as needed.

- This allows the model to retrieve URLs that are mentioned in the chat or that it knows from elsewhere, and to read the page content. The tool is an alternative to manually attaching website content.

Automatic Model Preselection

The new “Auto” mode is now selected by default for new chats. This means that the AI chat automatically selects the appropriate model for your request. In this mode, you no longer need to select a model manually.

The process uses a language model to analyze your input and decide which AI model is most suitable.

It checks:

- whether your request contains images

- whether it is a knowledge query, a creative task, or something else

- the difficulty level of the question

The process does not evaluate requests by subject area, but by the nature of the request. Once a model has been chosen, it is displayed to you.

You can still select the desired model for a chat yourself, thereby bypassing the automatic selection.
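The selection logic described above can be sketched roughly as follows. This is only an illustration under stated assumptions: in the AI chat the analysis is done by a language model, whereas here the result of that analysis is simply passed in as a `RequestProfile`. The names `RequestProfile` and `pick_model` and all `placeholder-...` model names are hypothetical; only the routing of image requests to Qwen3 235B VL is taken from this announcement.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RequestProfile:
    """Result of analyzing a request (hypothetical structure)."""
    has_images: bool
    kind: str        # "knowledge", "creative", or "other"
    difficulty: str  # "easy" or "hard"


def pick_model(profile: RequestProfile, manual: Optional[str] = None) -> str:
    """Return a model name; a manual choice bypasses the automatic selection."""
    if manual is not None:
        return manual
    if profile.has_images:
        return "Qwen3 235B VL"  # image requests, as stated in the announcement
    if profile.kind == "creative":
        return "placeholder-creative-model"  # assumed mapping
    if profile.difficulty == "hard":
        return "placeholder-large-model"     # assumed mapping
    return "placeholder-small-model"         # assumed default
```

Note that the sketch looks only at the nature of the request (images, type, difficulty) and never at its subject area, which mirrors the description above.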

Model Replacement

The Qwen3 235B model is being replaced by the new Qwen3 235B VL variant, which can also recognize and process images. Gemma 3 27B, which was previously used for image processing, is therefore being removed from the model selection. In the future, all image requests will be processed by Qwen3 235B VL.

Qwen3 Coder 30B now only serves niche purposes (e.g., as a “task” model or for fill-in-the-middle (FIM) code completion), and better alternatives are available in the chat context. It can therefore now only be used via the API.

Extended Documentation

New, comprehensive documentation on agentic coding and API usage is available on our website:

https://www.en-zdv.uni-mainz.de/ai-chat-agentic-coding/

https://www.en-zdv.uni-mainz.de/ai-chat-api-usage/

