The ZDV is part of the European joint project ADMIRE, which aims to develop a new adaptive storage system for high-performance computers. Together with fourteen institutions from six countries, ADMIRE seeks to significantly reduce the running time of applications in weather forecasting, molecular dynamics, turbulence simulation, cartography, brain research and software cataloging.

The ZDV research group "Efficient Computing and Storage" focuses on so-called ad-hoc file systems, which the overall ADMIRE system can deploy and control for applications on demand. This is intended to significantly increase the overall I/O performance of applications and to relieve the central parallel file systems (such as Lustre). The project builds on GekkoFS, a file system developed in-house at the ZDV. GekkoFS is being adapted to the requirements of modern applications on high-performance computers, so that it delivers the highest possible I/O performance and can react dynamically to decisions made by the overall ADMIRE system.

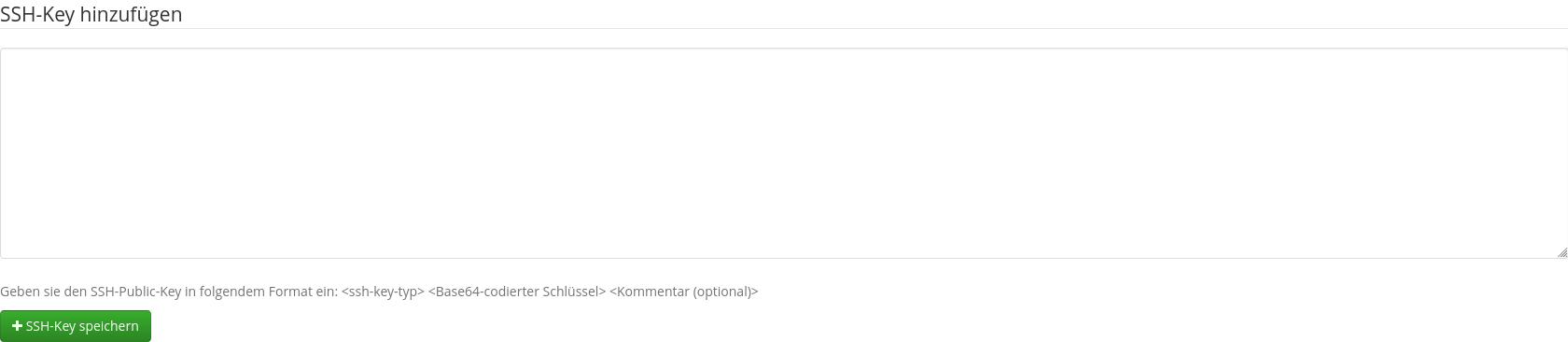

The ADMIRE project at a glance © ADMIRE

Additional information: https://www.uni-mainz.de/presse/aktuell/13932_ENG_HTML.php

